The Robot’s Achilles’ Heel: Almost Perfect, But Not Quite

Imagine a robot that can navigate a war zone, do a backflip, or even cook an omelet, yet can barely pick up a lightbulb without crushing it. This is known as the “Last Centimeter Problem”: the frustrating gap between a robot locating an object and grasping it with human-like precision.

Even after the AI revolution in vision and locomotion, one stubborn final step remains: contact. Why? Because real-world objects are not identical. A grocery bag, a surgical needle, and a ripe tomato each demand different grip pressure, grip shape, and real-time adjustment, something humans do instinctively.

Amazon is a case in point. It has not been able to fully automate its warehouses, and humans are still needed to pick small items. Amazon and other companies with highly automated warehouses struggle with the consistency of their robots: according to Logistics Tech News (2024), the robots failed 15-20 percent of the time when handling irregularly shaped goods. That inefficiency costs billions of dollars.

Why Current Gripping Tech Falls Short

Most robots rely on stiff, pre-programmed, two-fingered claws: perfect for repetitive chores (such as lifting auto parts) but useless with perishable goods such as oranges.

- Soft Robotics’ food-handling grippers (used in lettuce packing) adapt better to delicate items, but they lack true tactile feedback.

- Boston Dynamics’ Stretch can move heavy boxes into place but is not suited to handling fragile electronics.

- Conventional suction cups fail on porous or rough surfaces (e.g., a crumpled T-shirt).

Expert Insight:

“We have been overindexing on vision and neglecting touch,” says Dr. Robert Katzschmann (ETH Zurich Robotics Lab). “Humans do not simply see things; our hands feel pressure, texture, and slip at the same time. Robots? They are only speculating.”

The Cutting-Edge Solutions Emerging Now

Tactile Sensors & Artificial Skin

Startups such as Tactile Robotics (an MIT spinout) are adding pressure-sensitive skin to grippers, which lets robots sense slippage and automatically tighten their grip.

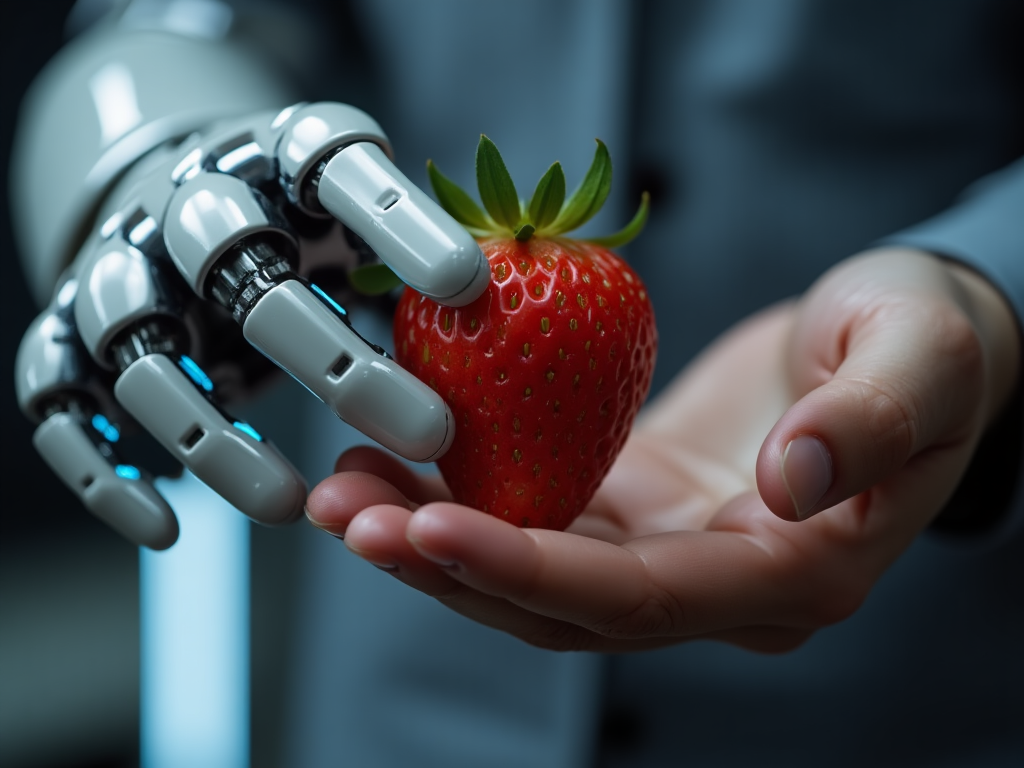

Real-World Impact: Their strawberry-harvesting robot reduced fruit damage on California farms by 40 percent (AgriTech Today, 2024).
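The slip-and-tighten behavior described above can be illustrated with a minimal control-loop sketch. This is not Tactile Robotics’ implementation: the `GripController` class, the shear-based slip test, the threshold, and the force values are all hypothetical, and real tactile skins use far richer signals. The idea is simple: if consecutive tactile readings jump by more than a threshold, treat it as slip and raise the grip force by a small step, up to a hard cap.

```python
from dataclasses import dataclass


@dataclass
class GripController:
    """Hypothetical slip-reactive grip controller (illustrative sketch only)."""
    force_n: float = 1.0          # current commanded grip force, in newtons (assumed)
    max_force_n: float = 5.0      # hard cap so the gripper never crushes the object
    step_n: float = 0.5           # how much to tighten per detected slip event
    slip_threshold: float = 0.2   # shear change treated as slip (arbitrary sensor units)

    def update(self, shear_prev: float, shear_now: float) -> float:
        """Tighten the grip if consecutive tactile readings suggest slipping."""
        # Crude stand-in for slip detection: a sudden jump in shear between readings.
        slipping = abs(shear_now - shear_prev) > self.slip_threshold
        if slipping:
            self.force_n = min(self.force_n + self.step_n, self.max_force_n)
        return self.force_n


def simulate(readings: list[float]) -> list[float]:
    """Feed a sequence of made-up shear readings through the controller."""
    ctrl = GripController()
    return [ctrl.update(prev, now) for prev, now in zip(readings, readings[1:])]


if __name__ == "__main__":
    # Two sudden jumps in shear trigger two tightening steps.
    print(simulate([0.0, 0.05, 0.4, 0.45, 1.0, 1.05]))  # [1.0, 1.5, 1.5, 2.0, 2.0]
```

The cap (`max_force_n`) is the design choice that matters for fragile produce such as strawberries: tightening on slip is only safe if there is a ceiling on how hard the gripper can squeeze.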

AI-Driven Learning by Trial and Error

Embodied Intelligence (founded by former OpenAI engineers) used reinforcement learning to train robots in computer simulations before deploying them in the real world.

Outcome: In cluttered environments, grasp success rates rose from 65 percent to 92 percent.
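Trial-and-error learning in simulation can be sketched with an epsilon-greedy multi-armed bandit, the simplest form of reinforcement learning. This is a swapped-in toy, not Embodied Intelligence’s method: the three grasp strategies and their hidden success rates are invented, and the “simulator” is just a weighted coin flip. The robot mostly repeats whichever strategy has worked best so far, occasionally tries a random one, and updates its success estimates after every attempt.

```python
import random

# Hypothetical grasp strategies and their *hidden* success rates in a toy simulator.
# These numbers are made up for illustration; they are not measured data.
TRUE_SUCCESS = {"top_pinch": 0.55, "side_wrap": 0.80, "suction": 0.35}


def simulate_grasp(strategy: str, rng: random.Random) -> bool:
    """Toy 'simulator': a grasp succeeds with the strategy's hidden probability."""
    return rng.random() < TRUE_SUCCESS[strategy]


def learn(trials: int = 2000, epsilon: float = 0.1, seed: int = 0) -> dict[str, float]:
    """Epsilon-greedy trial and error: mostly exploit the best-known strategy,
    sometimes explore a random one, and update success estimates from outcomes."""
    rng = random.Random(seed)
    counts = {s: 0 for s in TRUE_SUCCESS}
    estimates = {s: 0.0 for s in TRUE_SUCCESS}
    for _ in range(trials):
        if rng.random() < epsilon:
            strategy = rng.choice(list(TRUE_SUCCESS))     # explore
        else:
            strategy = max(estimates, key=estimates.get)  # exploit
        reward = 1.0 if simulate_grasp(strategy, rng) else 0.0
        counts[strategy] += 1
        # Incremental mean: new_estimate = old + (reward - old) / n
        estimates[strategy] += (reward - estimates[strategy]) / counts[strategy]
    return estimates


if __name__ == "__main__":
    est = learn()
    print("learned estimates:", {k: round(v, 2) for k, v in est.items()})
    print("preferred strategy:", max(est, key=est.get))
```

Real systems learn over continuous grasp poses from camera and tactile input rather than three fixed options, but the explore-then-exploit loop is the same basic idea.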

Gecko-Inspired & Magnetic Grippers

Stanford’s grippers use a so-called gecko adhesive that can cling to a glass wall and manipulate objects with very smooth surfaces.

UC Berkeley’s magnetic slurry grippers solidify on demand, molding themselves to almost any shape.

The Billion-Dollar Stakes: Who Wins If This Is Solved?

Once robotic grasping reaches 99 percent reliability, entire industries could change almost overnight:

- E-Commerce: Fully automated warehouses with no human pickers.

- Medical: Robotic nurses inserting IVs or stitching wounds.

- Farming: Harvesting delicate crops without bruising them.
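A quick back-of-the-envelope calculation shows why the 99 percent threshold matters. The sketch below assumes each pick succeeds or fails independently at a fixed rate and uses a hypothetical 10-item order; the 80 and 92 percent figures correspond to the 20 percent failure rate cited earlier and the 92 percent success rate reported for simulation-trained grasping.

```python
def expected_failures(success_rate: float, picks: int) -> float:
    """Expected number of failed picks, assuming independent picks."""
    return picks * (1.0 - success_rate)


def order_success_probability(success_rate: float, items: int) -> float:
    """Probability that every item in an order is picked on the first try,
    assuming independent picks with the same success rate."""
    return success_rate ** items


if __name__ == "__main__":
    for rate in (0.80, 0.92, 0.99):
        print(f"{rate:.0%} per pick: "
              f"{expected_failures(rate, 10_000):,.0f} failures per 10,000 picks, "
              f"{order_success_probability(rate, 10):.0%} of 10-item orders error-free")
```

Under these assumptions, the share of 10-item orders picked without a single failure rises from roughly 11 percent at 80 percent reliability, to about 43 percent at 92 percent, to about 90 percent at 99 percent.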

Market Impact:

“ABI Research estimates that solving the last centimeter problem could unlock a service robotics market worth $50B+ by 2030.”

The Big Debate: Are We Close—Or Decades Away?

The optimists (the Tesla Optimus team, among others) believe that AI plus significantly better sensors will solve this within 5-10 years.

The skeptics (including engineers at Boston Dynamics) argue that true human-level dexterity may require biologically inspired designs that we do not have yet.

Personal Take:

Having tested several robotic arms in laboratories, I have watched even the best of them fail to cope with a crumpled paper cup. Vision gets them 90 percent of the way, but the last 10 percent? That is where the magic (and the frustration) lies.

Final Thought: The Next “ChatGPT Moment” for Robotics?

We have taught machines to see, walk, and even think. Now the last frontier is teaching them to touch.

Ponder This Question:

Will governments invest more in tactile robotics research, or will private labs get there first?