Take the example of a robot that can build a car but cannot pick a ripe strawberry: it would simply crush the fruit. This has been the great paradox of robotics for decades. Machines have laser-sharp vision and superhuman strength, yet they are oblivious to the physical world because they lack a sense of touch. That is now changing. Through a quiet revolution, machines are gaining the ability to feel, and this capability is the key to a new generation of genuinely useful AI Robotics.

Why Vision Just Isn’t Enough

Cameras and lasers give a robot superb vision, but what they deliver is a flat, silent picture. Consider threading a needle. You do not just look; you feel the resistance of the thread and the slight tremor as it passes through the eye. That is the critical data touch provides: pressure, texture, and slip. Without it, every interaction is a guess, and a powerful grip becomes a destructive force. This sensory gap has confined robots to rigid, predictable tasks.

“The question is no longer ‘What is it?’ but ‘How do I handle it?’” – Lab head, MIT CSAIL

The Artificial Skin Revolution

Engineers are developing a new level of perception: synthetic skin. This isn’t science fiction. MIT, for example, created a sensor called GelSight, which places a camera behind a soft gel pad to produce a three-dimensional map of any surface the gel touches. Meanwhile, researchers at Carnegie Mellon are printing pliable “e-skin” that can sense pressure, shear, and twisting forces. Such technologies act as a robot’s nervous system, converting physical touch into digital information.
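
To make the camera-behind-gel idea concrete, here is a minimal sketch of photometric stereo, the general technique of recovering surface orientation from images lit from different directions. It assumes an idealized matte (Lambertian) gel and three known light directions, and every name in it (`surface_normal`, `solve3`, the light geometry) is hypothetical; it illustrates the principle rather than GelSight’s actual calibration pipeline. It recovers the surface normal at a single pixel; a depth map would then come from integrating those normals across the image.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

def _det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def solve3(a: List[List[float]], b: Sequence[float]) -> List[float]:
    """Solve the 3x3 system a @ x = b with Cramer's rule."""
    d = _det3(a)
    if abs(d) < 1e-12:
        raise ValueError("light directions must be linearly independent")
    x = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]  # replace one column with the brightness readings
        x.append(_det3(m) / d)
    return x

def surface_normal(lights: Sequence[Vec3], intensities: Sequence[float]) -> Vec3:
    """Recover the unit surface normal at one pixel (hypothetical Lambertian model).

    Each reading obeys I_k = albedo * dot(light_k, n). Solving L g = I gives
    g = albedo * n, so normalizing g yields n.
    """
    g = solve3([list(light) for light in lights], intensities)
    norm = math.sqrt(sum(c * c for c in g))
    return (g[0] / norm, g[1] / norm, g[2] / norm)

# Assumed geometry: three lights tilted about 30 degrees off the camera axis.
lights = [(0.5, 0.0, 0.866), (0.0, 0.5, 0.866), (-0.5, 0.0, 0.866)]
true_normal = (0.0, 0.6, 0.8)  # a spot where the gel is pressed into a slope
albedo = 0.8
readings = [albedo * sum(light[i] * true_normal[i] for i in range(3)) for light in lights]
print(surface_normal(lights, readings))  # approximately (0.0, 0.6, 0.8)
```

Three lights are the minimum that makes the per-pixel system solvable; with fewer, the normal and the albedo cannot be separated.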

The Intelligence That Learns to Read Touch

Data is nothing without interpretation, and this is where AI becomes the brain. New machine learning models process this stream of tactile data and are trained to recognize patterns. What does the onset of a slip feel like? How much force will bruise a piece of fruit? A company called SynTouch has built a database of such sensations, and its AI Robotics systems can recognize hundreds of materials simply by touching them. The machine is no longer feeling blindly; it is learning to understand.
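
The pattern-learning step can be illustrated with a deliberately tiny classifier. This sketch assumes each touch has already been reduced to three hypothetical features (compliance, friction, roughness) scaled to 0..1, and it labels a new touch by k-nearest-neighbor voting against a small library of remembered touches. The materials and numbers are invented for illustration; they are not SynTouch’s data, and production systems use far richer signals and models.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

# HYPOTHETICAL feature vector per touch: (compliance, friction, roughness), each 0..1.
Sample = Tuple[Sequence[float], str]

def classify(touch: Sequence[float], library: List[Sample], k: int = 3) -> str:
    """Label a new touch by majority vote among its k nearest stored touches."""
    nearest = sorted(library, key=lambda s: math.dist(touch, s[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Made-up "remembered" touches for three materials.
library: List[Sample] = [
    ((0.90, 0.70, 0.20), "foam"),
    ((0.85, 0.65, 0.25), "foam"),
    ((0.80, 0.75, 0.15), "foam"),
    ((0.05, 0.20, 0.05), "glass"),
    ((0.10, 0.25, 0.10), "glass"),
    ((0.08, 0.15, 0.08), "glass"),
    ((0.15, 0.60, 0.85), "sandpaper"),
    ((0.20, 0.65, 0.90), "sandpaper"),
    ((0.10, 0.55, 0.80), "sandpaper"),
]

print(classify((0.12, 0.58, 0.88), library))  # sandpaper
```

Nearest-neighbor voting needs no training phase, which keeps the example short; adding a material is just adding labeled touches to the library.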

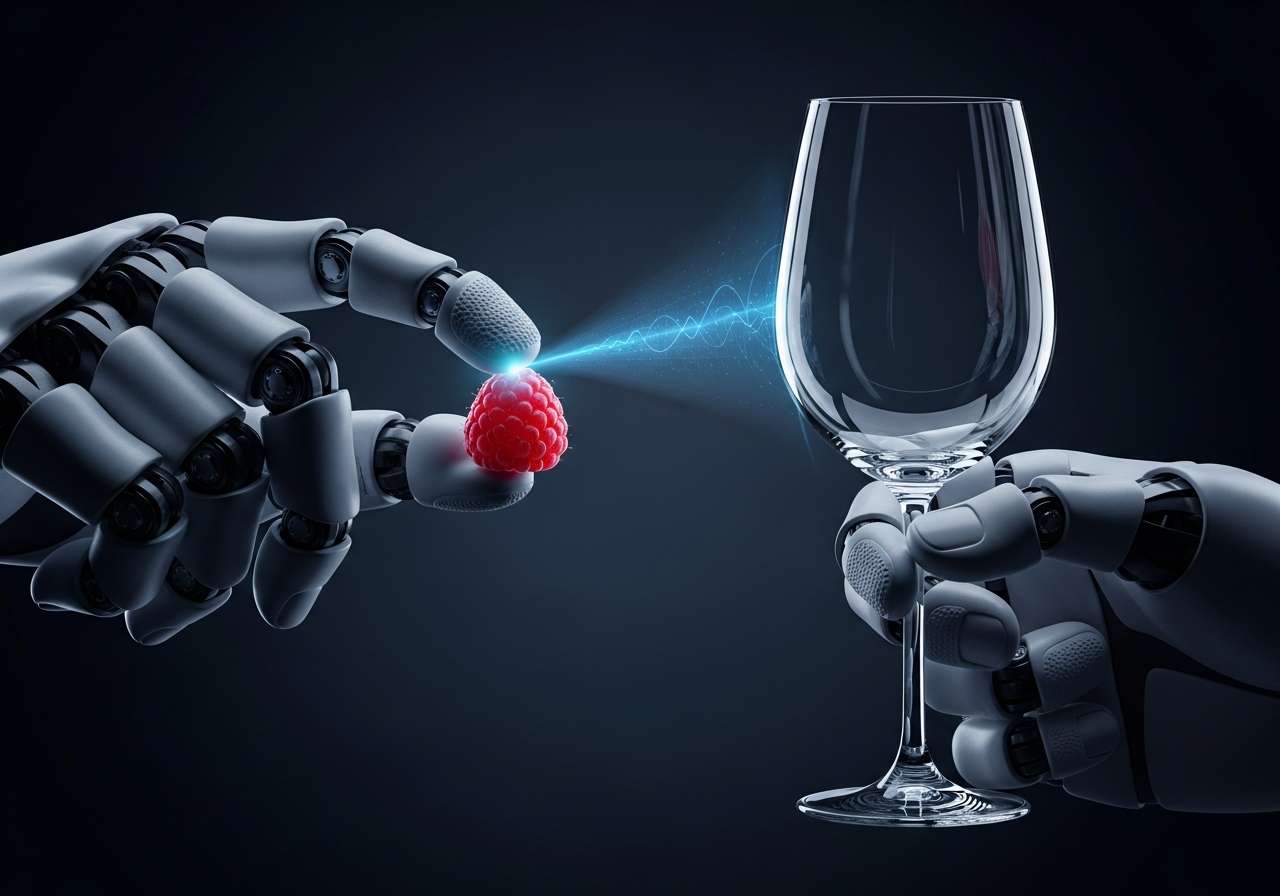

The Berry-Picking Robot Case Study

Consider a real-world problem in California. A startup needed to automate the raspberry harvest. Raspberries are extremely delicate: a vision system could locate a perfect berry, but the robot’s gripper would crush it every time. The solution was a tactile fingertip, a sensor that measured the berry’s firmness in real time, and the AI then adjusted the grip force automatically. The result? A long, productive summer of harvesting without damaged fruit. This was the breakthrough of tactile feedback: it turned a clumsy machine into a skilled harvester.
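
The feedback loop in this case study can be sketched as follows. Everything here is an assumption for illustration, not the startup’s method: firmness is estimated as stiffness from one light probe, the bruise threshold is assumed to scale linearly with firmness through a hypothetical constant `bruise_per_firmness`, the holding force comes from a simple two-finger friction model, and a proportional controller ramps the squeeze toward that setpoint. The berry mass, friction coefficient, and probe readings are made-up values.

```python
from typing import List, Tuple

G = 9.81  # gravitational acceleration, m/s^2

def estimate_firmness(probe_force_n: float, indentation_mm: float) -> float:
    """Firmness as stiffness (N/mm): how hard the berry pushes back per mm pressed."""
    return probe_force_n / indentation_mm

def grip_setpoint(mass_kg: float, friction_coeff: float, firmness_n_per_mm: float,
                  bruise_per_firmness: float = 2.0, safety: float = 1.5) -> Tuple[float, float]:
    """Return (squeeze setpoint, assumed bruise limit) in newtons.

    bruise_per_firmness is a HYPOTHETICAL calibration constant: the sketch assumes
    the force that bruises a berry grows linearly with its measured firmness.
    """
    # Two opposing fingertips each supply friction mu * F, so 2 * mu * F must exceed m * g.
    hold_n = safety * mass_kg * G / (2 * friction_coeff)
    bruise_limit_n = bruise_per_firmness * firmness_n_per_mm
    if hold_n >= bruise_limit_n:
        raise ValueError("berry too soft to lift safely; skip it")
    return hold_n, bruise_limit_n

def ramp_grip(setpoint_n: float, gain: float = 0.3, steps: int = 40) -> List[float]:
    """Proportional control: each tick, close a fixed fraction of the remaining error.

    In this idealized sketch the fingertip reading equals the commanded force.
    """
    force_n, history = 0.0, []
    for _ in range(steps):
        error_n = setpoint_n - force_n  # error as reported by the tactile sensor
        force_n += gain * error_n
        history.append(force_n)
    return history

# Made-up example: a 4 g berry, pad friction 0.5, a 0.2 N probe pressing 0.5 mm.
firmness = estimate_firmness(0.2, 0.5)
setpoint, limit = grip_setpoint(0.004, 0.5, firmness)
forces = ramp_grip(setpoint)
print(f"setpoint {setpoint:.3f} N, bruise limit {limit:.2f} N, final {forces[-1]:.3f} N")
```

Because the gain is below 1 and the ramp starts from zero, the force approaches the setpoint from below and never overshoots it, which is the property a delicate grip needs.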

From Farm to Operating Room

The applications are multiplying rapidly. In agriculture, companies such as John Deere are exploring tactile weeders that can feel the difference between a plant stem and the soil. In medicine, Da Vinci surgical systems are incorporating touch, and surgeons may soon be able to feel tissue remotely, which could help them distinguish healthy tissue from abnormal tissue. Even in logistics, robots can now pack mixed items, handling a fragile lightbulb and a heavy tool each with the right amount of care.

An Unexpected Obstacle: Sensory Overload

The greatest challenge is not sensitivity; it is selectivity. Too much sensory information can paralyze a robot. How does it decide what matters?

- Filtering noise: The system should ignore irrelevant sensations, such as a passing current of air.

- Attending to signal: It must respond to critical cues, such as a scalpel beginning to slip.

We are teaching AI this kind of selective attention. It is one of the central challenges for any future Humanoid Robot that must navigate the world.
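
The simplest hand-tuned version of this selectivity is to filter before deciding; the point above is that AI learns it instead, so treat this as a stand-in. The sketch, with hypothetical function names and a made-up signal, passes a fingertip pressure trace through a first-order high-pass filter, which suppresses the slow baseline of a steady grip, and then applies a threshold so that small jitter (standing in for sensations like moving air) is ignored while a vibration burst (standing in for slip) is flagged.

```python
from typing import List

def high_pass(signal: List[float], alpha: float = 0.9) -> List[float]:
    """First-order high-pass filter: keeps fast vibrations, drops the slow baseline."""
    out = [0.0]
    for i in range(1, len(signal)):
        out.append(alpha * (out[-1] + signal[i] - signal[i - 1]))
    return out

def detect_slip(signal: List[float], threshold: float = 0.5, alpha: float = 0.9) -> List[int]:
    """Return the sample indices where filtered vibration exceeds the threshold."""
    filtered = high_pass(signal, alpha)
    return [i for i, v in enumerate(filtered) if abs(v) > threshold]

# Synthetic fingertip pressure trace (arbitrary units): a slowly rising grip,
# +/-0.02 jitter standing in for irrelevant sensations such as air currents,
# and a six-sample vibration burst at indices 30-35 standing in for slip.
signal = []
for i in range(60):
    value = 5.0 + 0.01 * i + (0.02 if i % 2 else -0.02)
    if 30 <= i <= 35:
        value += 1.0 if i % 2 == 0 else -1.0
    signal.append(value)

print(detect_slip(signal))  # [30, 31, 32, 33, 34, 35]
```

The jitter never lifts the filtered signal much above 0.1, well under the 0.5 threshold, so only the burst is reported.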

The Humanoid Robot That Squeezes Your Hand

This technology is critical for the coming generation of Humanoid Robots. Imagine a helper robot in your home. It has to shake your hand without crushing it, wash a delicate wine glass, and help an elderly person into a chair. Every one of these tasks requires a gentle, adaptive hand. This kind of physical empathy is essential for true collaboration. With it, robots will stop being mere servants.

Conclusion: More Than Just Machines

We are not merely building better robots; we are bridging a fundamental divide between the digital and the physical. Giving machines a sense of touch is a significant step, and it calls for careful thought. As robots acquire this deeply human sense, what will we do with it? Their success will not be measured in speed or power but in delicacy and perception. The future of AI Robotics requires more than intelligence. It requires sensitivity.